VMware NIC Trunking Design

27 Aug 2010 by Simon Greaves

Having read various books, articles, white papers and best practice guides, I have found it difficult to find consistently good advice on vNetwork and physical switch teaming design, so I thought I would write my own based on what I have tested and configured myself.

To begin with I must say I am no networking expert and may not cover some of the advanced features of switches, but I will provide links for further reference where appropriate.

The basics

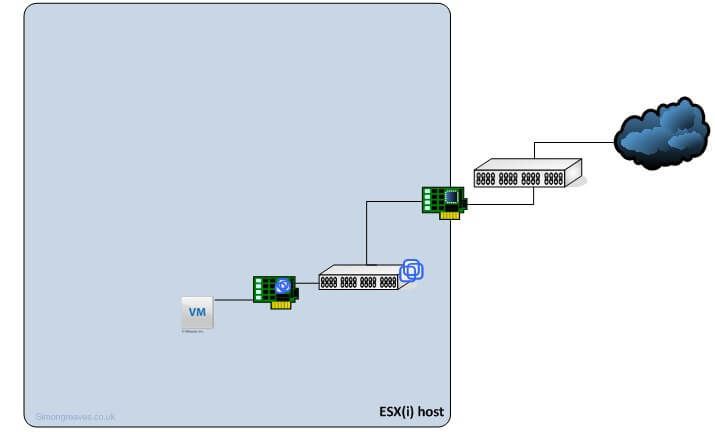

Each physical ESX(i) host has at least one physical NIC (pNIC) which is called an uplink.

Each uplink is known to the ESX(i) host as a vmnic.

Each vmnic is connected to a virtual switch (vSwitch).

Each virtual machine on the ESX(i) host has at least one virtual NIC (vNIC) which is connected to the vSwitch.

The virtual machine is only aware of the vNIC; only the vSwitch is aware of the uplink-to-vNIC relationship.

This setup offers a one to one relationship between the virtual machine (VM) connected to the vNIC and the pNIC connected to the physical switch port, as illustrated below.

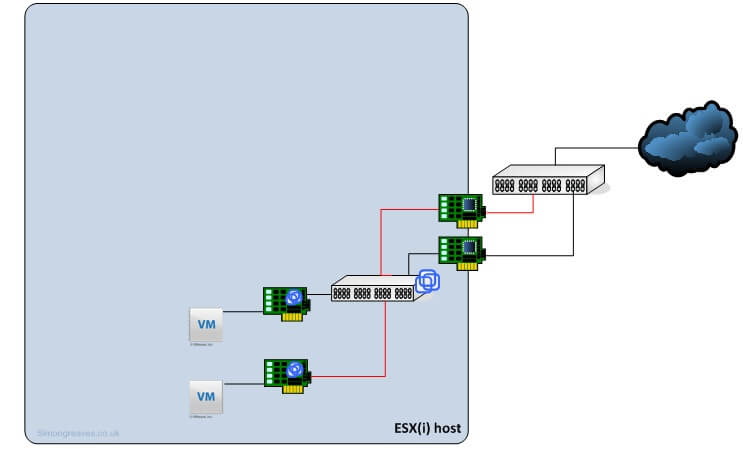

When another virtual machine is added, a second vNIC is added. This in turn is connected to the vSwitch, and both vNICs share the same pNIC and the physical port that the pNIC is connected to on the physical switch (pSwitch).

When adding more physical NICs we then have additional options with network teaming.

NIC Teaming

NIC teaming offers us the option to use connection based load balancing, which is balanced by the number of connections and not on the amount of traffic flowing over the network.

This load balancing can give our connections resilience by monitoring the links. If a link goes down, whether it's the physical NIC or the physical port on the switch, traffic is sent over the remaining uplinks so that connectivity is maintained. It is also possible to use multiple physical switches provided they are all in the same broadcast domain. What it will not do is allow you to send traffic over multiple uplinks at once, unless you configure the physical switches correctly.
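To illustrate why balancing by connection count is not the same as balancing by traffic, here is a minimal Python sketch. The VM names, traffic figures and the round-robin placement are hypothetical values chosen for illustration, not how ESX(i) actually places vNICs.

```python
from collections import defaultdict

def assign_round_robin(vnics, uplinks):
    """Place each vNIC on an uplink in turn, ignoring how busy the vNIC is."""
    return {vnic: uplinks[i % len(uplinks)] for i, vnic in enumerate(vnics)}

def load_per_uplink(placement, traffic_mbps):
    """Sum the traffic of every vNIC that landed on each uplink."""
    totals = defaultdict(int)
    for vnic, uplink in placement.items():
        totals[uplink] += traffic_mbps[vnic]
    return dict(totals)

# Hypothetical per-VM traffic in Mbit/s.
traffic_mbps = {"vm1": 900, "vm2": 10, "vm3": 800, "vm4": 20}

placement = assign_round_robin(list(traffic_mbps), ["vmnic0", "vmnic1"])
load = load_per_uplink(placement, traffic_mbps)
print(placement)
print(load)
```

Each uplink carries two vNICs, so the connection count is even, yet almost all of the traffic ends up on one uplink.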

There are four options with NIC teaming, although the fourth is not really a teaming option.

- Port-based NIC teaming

- MAC address-based NIC teaming

- IP hash-based NIC teaming

- Explicit failover

Port-based NIC teaming

Route based on the originating virtual port ID, or port-based NIC teaming as it is commonly known, does as it says and routes network traffic based on the virtual port on the vSwitch that it came from. This type of teaming doesn't allow a vNIC's traffic to be spread across multiple uplinks. It keeps a one-to-one relationship between the virtual machine and the uplink port when sending and receiving to all network devices. This can lead to a problem where the number of physical uplinks exceeds the number of virtual ports in use, as you would then end up with uplinks that don't do anything. As such, the only time I would recommend using this type of teaming is when the number of virtual NICs exceeds the number of physical uplinks.
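The idle uplink problem can be sketched in a few lines of Python. The modulo mapping below is an assumption made for illustration (the real selection logic is internal to the vSwitch), and the port IDs and vmnic names are hypothetical.

```python
def uplink_for_port(virtual_port_id, uplinks):
    """Pin a virtual port to exactly one uplink (assumed modulo mapping)."""
    return uplinks[virtual_port_id % len(uplinks)]

uplinks = ["vmnic0", "vmnic1", "vmnic2", "vmnic3"]

# Only two vNICs are connected, on virtual ports 0 and 1.
used = {uplink_for_port(port_id, uplinks) for port_id in (0, 1)}
idle = [u for u in uplinks if u not in used]
print("used:", sorted(used))
print("idle:", idle)
```

With four uplinks and only two virtual ports in use, two uplinks never carry any traffic.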

MAC address-based NIC teaming

Route based on MAC hash, or MAC address-based NIC teaming, chooses the uplink from a hash of the originating vNIC's MAC address. This works in a similar way to port-based NIC teaming in that each vNIC sends its network traffic over only one uplink. Again, the only time I would recommend using this type of teaming is when the number of virtual NICs exceeds the number of physical uplinks.
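As a rough sketch of the idea, the Python below picks an uplink from the last byte of the source MAC address. Using the last byte and a modulo is my assumption for illustration only; the MAC addresses and vmnic names are made up.

```python
def uplink_for_mac(mac, uplinks):
    """Choose one uplink from the vNIC's MAC address (assumed: last byte mod uplink count)."""
    last_byte = int(mac.split(":")[-1], 16)
    return uplinks[last_byte % len(uplinks)]

uplinks = ["vmnic0", "vmnic1"]
first = uplink_for_mac("00:50:56:aa:bb:01", uplinks)
second = uplink_for_mac("00:50:56:aa:bb:02", uplinks)
print(first, second)
```

Because the MAC address of a vNIC does not change, the same vNIC always lands on the same single uplink.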

IP hash-based NIC teaming

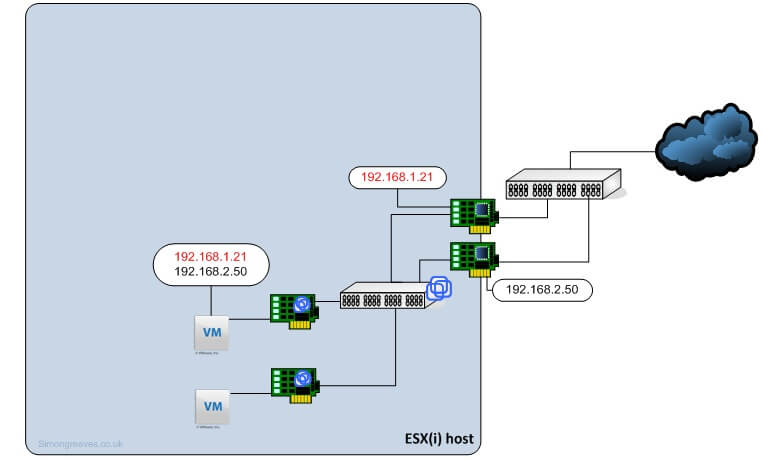

Route based on IP hash, or IP hash-based NIC teaming, works differently from the other types of teaming. It takes the source and destination IP addresses and creates a hash. It can use multiple uplinks per VM and spread the VM's traffic across them when sending data to multiple network destinations.

Although IP hash-based teaming can utilise multiple uplinks, it will only use one uplink per session (that is, per source and destination IP pair). This means that if you are sending a lot of data between one virtual machine and another server, that traffic will only transfer over one uplink. With IP hash-based teaming we can then use the teaming or trunking options on the physical switches. Depending on the switch type, IP hash requires EtherChannel (or the vendor's equivalent) on the physical switch ports; for all other teaming types it should be disabled.
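Here is a simplified Python sketch of hashing on the source and destination IP addresses. XORing the two addresses and taking the result modulo the number of uplinks is an assumed, commonly described form of the hash, not a statement of the exact ESX(i) implementation; the addresses are hypothetical.

```python
import ipaddress

def uplink_for_flow(src_ip, dst_ip, uplinks):
    """Pick an uplink from the source/destination IPv4 pair (assumed XOR-and-modulo hash)."""
    src = int(ipaddress.IPv4Address(src_ip))
    dst = int(ipaddress.IPv4Address(dst_ip))
    return uplinks[(src ^ dst) % len(uplinks)]

uplinks = ["vmnic0", "vmnic1"]
vm_ip = "10.0.0.10"

to_server_a = uplink_for_flow(vm_ip, "10.0.0.20", uplinks)
to_server_b = uplink_for_flow(vm_ip, "10.0.0.21", uplinks)
print(to_server_a, to_server_b)
```

The same VM reaches two destinations over two different uplinks, but every packet between one given pair of addresses always hashes to the same uplink, which is why a single large transfer never exceeds the speed of one link.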

Explicit failover

This allows you to override the default ordering of failover on the uplinks. The only time I can see this being useful is if the uplinks are connected to multiple physical switches and you want to use them in a particular order. Either that, or you think a pNIC in the ESX(i) host is not working correctly. If you use this setting it is best to configure those vmnics or adapters as standby adapters, as any active adapters will be used first, from the highest in the order and then down.
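The ordering can be pictured with a small Python sketch. The function name, the vmnic names and the link states are hypothetical; it only models "walk the active list from the top, then the standby list".

```python
def choose_uplink(active, standby, link_up):
    """Return the first healthy adapter, trying active adapters before standby ones."""
    for nic in list(active) + list(standby):
        if link_up.get(nic, False):
            return nic
    return None  # no usable uplink left

active = ["vmnic0"]
standby = ["vmnic1", "vmnic2"]

all_up = choose_uplink(active, standby, {"vmnic0": True, "vmnic1": True, "vmnic2": True})
primary_down = choose_uplink(active, standby, {"vmnic0": False, "vmnic1": True, "vmnic2": True})
all_down = choose_uplink(active, standby, {"vmnic0": False, "vmnic1": False, "vmnic2": False})
print(all_up, primary_down, all_down)
```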

The other options

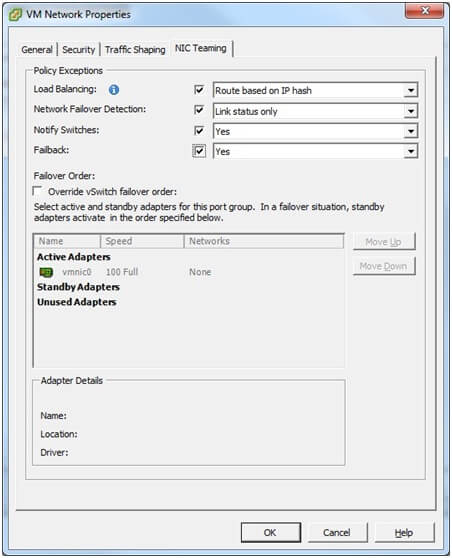

Network failover detection

There are two options for failover detection: link status only and beacon probing. Link status only monitors the status of the link, to ensure that a connection is available on both ends of the network cable. If the link becomes disconnected, it is marked as unusable and the traffic is sent over the remaining NICs. Beacon probing sends a beacon up the network on all uplinks in the team. This also checks that the port on the pSwitch is available and is not being blocked by configuration or switch issues. Further information is available on page 44 of the ESXi configuration guide. Do not use beacon probing with route based on IP hash.
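The difference between the two options can be shown with a toy Python model. The `beacons_seen` flag is a stand-in I have invented for the result of the real probe exchange, and the uplink states are hypothetical.

```python
def healthy_by_link_status(uplinks):
    """Link status only: an uplink is usable whenever the cable shows a link."""
    return [name for name, state in uplinks.items() if state["link_up"]]

def healthy_by_beacon(uplinks):
    """Beacon probing: the link must be up AND beacons must get through."""
    return [name for name, state in uplinks.items()
            if state["link_up"] and state["beacons_seen"]]

uplinks = {
    "vmnic0": {"link_up": True, "beacons_seen": True},    # healthy
    "vmnic1": {"link_up": True, "beacons_seen": False},   # port blocked on the pSwitch
    "vmnic2": {"link_up": False, "beacons_seen": False},  # cable unplugged
}

by_link = healthy_by_link_status(uplinks)
by_beacon = healthy_by_beacon(uplinks)
print(by_link)
print(by_beacon)
```

Link status alone still trusts vmnic1, because the cable is connected even though the switch port is not passing traffic.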

Notify switches

This should be left set to yes (default) to minimise the time the pSwitches take to update their MAC address tables after a failover. Do not use this when configuring Microsoft NLB in unicast mode.

Failback

Failback will re-enable the failed uplink once it is working correctly again and move back the traffic that was being sent over the standby uplink. Best practice is to leave this set to yes unless using IP-based storage. This is because if the link were to go up and down quickly, it could have a negative impact on iSCSI traffic performance.

Incoming traffic is controlled by the pSwitch routing the traffic to the ESX(i) host, and hence the ESX(i) host has no control over which physical NIC the traffic arrives on. As multiple NICs will be accepting traffic, the pSwitch will use whichever one it wants.
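To see why a flapping link matters for storage traffic, here is a small Python simulation. It assumes one primary uplink (vmnic0), one standby (vmnic1) that always stays up, and a made-up sequence of primary link states.

```python
def simulate(primary_states, failback):
    """Count how often traffic moves between uplinks as the primary link changes state."""
    current = "vmnic0"
    moves = 0
    for primary_up in primary_states:
        if current == "vmnic0" and not primary_up:
            current, moves = "vmnic1", moves + 1   # fail over to the standby
        elif current == "vmnic1" and primary_up and failback:
            current, moves = "vmnic0", moves + 1   # fail back to the primary
    return current, moves

# Hypothetical flapping primary link: up, down, up, down, up.
flapping = [True, False, True, False, True]

with_failback = simulate(flapping, failback=True)
without_failback = simulate(flapping, failback=False)
print(with_failback, without_failback)
```

With failback enabled the traffic moves four times during the flapping, while with failback disabled it moves once and then stays on the standby uplink.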

Load balancing on incoming traffic can be achieved by using and configuring a suitable pSwitch.

pSwitch configuration

The topics covered so far describe egress NIC teaming. With physical switches we have the added benefit of using ingress NIC teaming.

Various vendors support teaming on the physical switches; however, quite a few call trunking teaming and vice versa.

From the switches I have configured I would recommend the following.

All Switches

A lot of people recommend disabling Spanning Tree Protocol (STP), as vSwitches don't require it because they know the MAC address of every vNIC connected to them. I have found that the best practice is to enable STP and set the ports to Portfast. Without Portfast enabled there can be a delay while the pSwitch relearns the MAC addresses during convergence, which can take 30-50 seconds. Without STP enabled there is a chance of loops not being detected on the pSwitch.
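The 30-50 second range lines up with the classic 802.1D spanning tree timers. The short Python calculation below assumes the usual defaults of a 15 second forward delay and a 20 second max age; these can be changed on a switch, so treat the constants as assumptions.

```python
# Assumed classic 802.1D default timers, in seconds.
FORWARD_DELAY = 15
MAX_AGE = 20

def time_to_forwarding(wait_for_max_age):
    """Seconds before a port without Portfast starts forwarding traffic."""
    listening = FORWARD_DELAY   # listening state lasts one forward delay
    learning = FORWARD_DELAY    # learning state lasts another forward delay
    delay = listening + learning
    if wait_for_max_age:
        delay += MAX_AGE        # stale topology information must age out first
    return delay

best_case = time_to_forwarding(False)
worst_case = time_to_forwarding(True)
print(best_case, worst_case)
```

Portfast skips the listening and learning states on ports that connect to hosts, which is why the delay disappears.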

802.3ad & LACP

Link Aggregation Control Protocol (LACP) is a dynamic link aggregation protocol which can make other switches aware of the multiple links and combine them into one single logical unit, a link aggregation group (LAG). It also monitors those links, and if a failure is detected it will remove that link from the logical unit.

In vSphere 4.x and early 5.x versions, VMware doesn't support LACP. (Note: this is no longer true in vSphere 6.x and onwards. This is quite an old article, but the rest of the guide still holds true.) However, VMware does support IEEE 802.3ad, which can be achieved by configuring a static LACP trunk group or a static trunk. The disadvantage of this is that if one of those links goes down, 802.3ad static will continue to send traffic down that link.

Update: LAGs have a small use case in vSphere networks, such as IP storage; however, the administrative overhead involved in setting them up quite often outweighs the perceived benefits.
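The difference between a static group and a dynamically monitored one, as described above, can be modelled in Python. This is a toy model under my own assumptions: flows are plain integers hashed by modulo, and the port names and failure are hypothetical.

```python
def lost_flows(flow_hashes, members, link_ok):
    """Return the flows that get hashed onto a member link which is not passing traffic."""
    return [f for f in flow_hashes if not link_ok[members[f % len(members)]]]

configured = ["port1", "port2"]
link_ok = {"port1": True, "port2": False}   # port2 has failed
flows = list(range(6))

# Static group: membership never changes, so the failed link stays in use.
static_members = list(configured)
# Dynamic (LACP-style) group: failed links are removed from the logical unit.
dynamic_members = [m for m in configured if link_ok[m]]

static_lost = lost_flows(flows, static_members, link_ok)
dynamic_lost = lost_flows(flows, dynamic_members, link_ok)
print(static_lost, dynamic_lost)
```

In this model half of the flows are black-holed on the static group, while the dynamic group moves everything onto the surviving link.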

Don’t use LACP in vSphere unless you have to

Dell switches

Set Portfast using

spanning-tree portfast

To configure link aggregation, follow my Dell switch aggregation guide.

Further information on Dell switches is available through the product manuals.

Cisco switches

Set Portfast using

spanning-tree portfast (for an access port)

spanning-tree portfast trunk (for a trunk port)

Set EtherChannel

Further information is available in the Sample configuration of EtherChannel / Link aggregation with ESX and Cisco/HP switches.

HP switches

Set Portfast using

spanning-tree portfast (for an access port)

spanning-tree portfast trunk (for a trunk port)

Set static LACP trunk using

trunk < port-list > < trk1 ... trk60 > < trunk | lacp >

Further information is available in the Sample configuration of EtherChannel / Link aggregation with ESX and Cisco/HP switches.
