vSphere 5 - What's New (and relevant for the VCP 5) (Part 2)

13 Nov 2011 by Simon Greaves

This is part 2 of my guide; you can read part 1 here.

Storage

Quite a few improvements have been made to storage with vSphere 5.

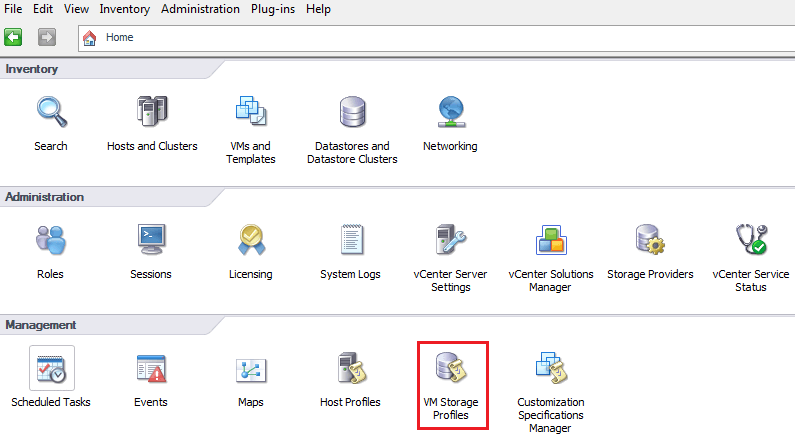

Storage Profiles

Profile-driven storage allows SLAs to be set for certain storage types. For example, used together with Storage DRS (explained below), it can automatically keep storage tiered so that high-I/O VMs remain on SSD drives. Profile-driven storage uses VASA (vSphere Storage APIs for Storage Awareness) and vCenter to continually monitor the storage and ensure that the SLAs for the storage profile are being met.

You can configure Storage Profiles from the Home screen in vCenter Server.

vSphere Storage Appliance

The vSphere Storage Appliance (VSA) is designed as a way of providing the cool features of vSphere, such as vMotion, HA and DRS without the requirement for an expensive SAN array. It is made up of two or three ‘greenfield’ ESXi servers which act as dedicated storage devices. It is not possible to have any virtual machines running on these servers, but the costs of such servers can be much cheaper than a dedicated SAN.

The VSA creates a VSA cluster. It uses shared datastores for all hosts in the cluster and stores a replica of each shared datastore on another host in the cluster. It presents the local storage as mirrored NFS datastores, whereby the mirror is the copy of the NFS datastore held on one of the other ESXi hosts in the cluster.

Graphic courtesy of VMware.com

The vSphere Storage Appliance is managed by VSA manager through the vCenter Server. The VSA manager allows replacement of a failed VSA cluster member and recovery of an existing VSA cluster.

To prevent split-brain scenarios a majority node cluster is required.

With a three-node cluster a majority of two nodes is required, and with a two-node cluster a VSA Cluster Service acts as a tertiary node. The VSA Cluster Service runs on vCenter Server.
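The majority rule can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not how the VSA implements it; the function name `has_quorum` and the idea of counting the VSA Cluster Service as one extra voter are my own assumptions for the example.

```python
def has_quorum(voters_online: int, voters_total: int) -> bool:
    """Return True when strictly more than half of all voters are reachable.

    Hypothetical helper for illustration only. In a two-node VSA cluster the
    VSA Cluster Service is counted here as a third voter, so voters_total is 3
    in both the two-node and the three-node case.
    """
    if not 0 <= voters_online <= voters_total:
        raise ValueError("voters_online must be between 0 and voters_total")
    return 2 * voters_online > voters_total


# Three voters, one lost: the remaining two still form a majority.
print(has_quorum(2, 3))
# Three voters, two lost: the survivor must not carry on alone.
print(has_quorum(1, 3))
```

With an even number of voters and no tie-breaker, a clean split would leave neither half with a majority, which is why the two-node cluster needs the extra service.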

The VSA cluster will enable vMotion and HA on the cluster for the virtual machines that are running on the VSA cluster.

It is an important design consideration that the storage requirements for the hosts in a VSA cluster will at least double, to account for the volume replicas that are created.
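As a rough sizing aid, the doubling can be written down as a small calculation. This is a back-of-the-envelope sketch that assumes every datastore has exactly one replica and ignores any other overhead; the function and parameter names are made up for the example.

```python
def usable_vsa_capacity_gb(local_gb_per_host: float, hosts: int,
                           copies_per_datastore: int = 2) -> float:
    """Estimate usable shared capacity in a VSA cluster.

    Assumptions (not taken from VMware documentation): every datastore is
    stored copies_per_datastore times (the original plus one replica), and
    local_gb_per_host is the local datastore size after RAID has been applied.
    """
    if hosts < 2 or local_gb_per_host <= 0 or copies_per_datastore < 1:
        raise ValueError("need at least two hosts and positive sizes")
    return local_gb_per_host * hosts / copies_per_datastore


# Three hosts with 1000 GB of local datastore each.
print(usable_vsa_capacity_gb(1000, 3))
```

Turning it around: to present a given amount of shared storage, plan for at least twice that amount of local datastore capacity across the hosts.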

Limitations

- No VMs on the ESXi hosts participating in the VSA cluster

- No virtual vCenter Server anywhere on the cluster

- One datastore on each server, and it must be local storage

- Only a short list of servers is officially supported, however it will probably work with most

- ESXi hosts must have the same hardware configuration

- Minimum 6GB RAM per host

- RAID controller that supports RAID 10 per host

VSA network traffic is split into front-end and back-end traffic.

Front End: enables communication between

- Each VSA cluster member and VSA manager

- ESXi and NFS volumes

- Each VSA cluster member and the VSA cluster service

Back End: enables communication between

- Replication between NFS volume and its replica that resides on another host

- Cluster communication between all VSA cluster members

- vMotion and Storage vMotion traffic between the hosts

Further information is available in the vSphere Storage Appliance Technical Whitepaper & Evaluation Guide

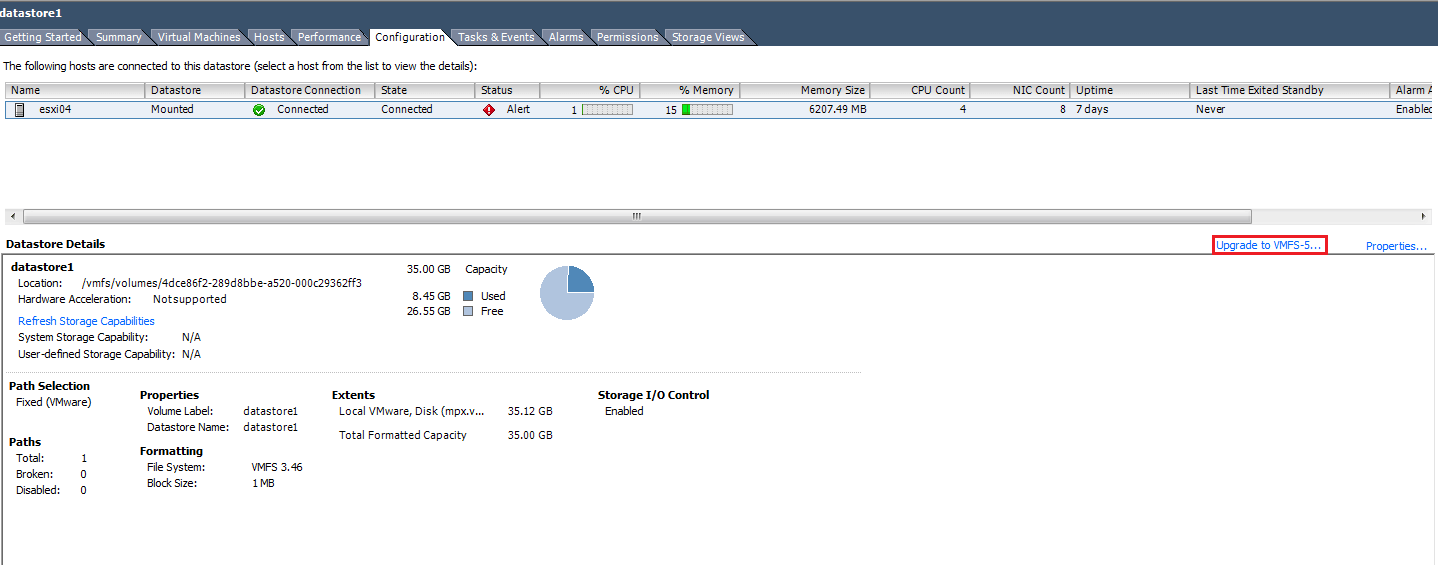

VMFS5

VMFS5 uses a GUID partition table (GPT) rather than an MBR partition table, which allows for much larger partition sizes. The maximum volume size is now 64TB. In fact, a single extent can be up to 64TB, and physical-mode RDMs can be greater than 2TB, up to 64TB.

When a host is upgraded to ESXi 5, its existing datastores keep their MBR format and block sizes. Once the host is upgraded, go to the Configuration tab, select the datastore and choose the option to upgrade to VMFS5. The datastore will then support the larger sizes, however the underlying LUN will remain in the MBR format until it is reformatted.

With GPT, all newly created datastores use a 1MB block size. If you upgrade instead, the block sizes of the upgraded host's datastores stay the same. This is not an issue because, as explained above, you will still be able to support volumes of up to 64TB.

The underlying sub-block is now 8KB rather than 64KB, which means that less space is wasted by small files stranding data in larger blocks. Once a file outgrows sub-block allocation, it is stored in 1MB blocks. If a file is smaller than 1KB, it is stored in the file descriptor itself, which is a 1KB structure held in the datastore metadata.
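To see why the smaller sub-block matters, here is a simplified calculation of the space left unused when a small file occupies a single sub-block. It deliberately covers only files that fit in one sub-block and is not a model of the full VMFS allocator; the function name is invented for the example.

```python
def sub_block_slack_kb(file_kb: float, sub_block_kb: int) -> float:
    """Unused space (in KB) when a small file occupies one sub-block.

    Simplified illustration: only valid for files that fit inside a single
    sub-block. Larger files are allocated differently and are out of scope.
    """
    if not 0 < file_kb <= sub_block_kb:
        raise ValueError("file must be larger than 0 and fit in one sub-block")
    return sub_block_kb - file_kb


# A 4KB file in a 64KB sub-block versus an 8KB sub-block.
print(sub_block_slack_kb(4, 64))
print(sub_block_slack_kb(4, 8))
```

Multiplied across the many small log, descriptor and configuration files on a datastore, the difference adds up.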

VMFS5 uses Atomic Test and Set (ATS) for all file locking. ATS is an advanced form of file locking with a smaller overhead when accessing the storage metadata than the locking used with VMFS3. ATS is part of the vSphere Storage APIs for Array Integration (VAAI) and was first available in vSphere 4.1.

vSphere Storage APIs for Array Integration (VAAI)

As mentioned, VAAI includes Atomic Test and Set (ATS) for file locking, as well as full copy, block zero and T10 compliance. VAAI also reduces CPU overhead on the host. Hardware acceleration for NAS has also been added, although VAAI doesn’t use ATS for file locking with NFS datastores.

The following primitives are available for VAAI NAS.

- Reserve space: enables storage arrays to allocate space for a VMDK in thick format

- Full file clone: enables hardware-assisted cloning of offline virtual disk files

- Fast file clone: allows linked-clone creation to be offloaded to the array. Currently only supported with VMware View.

This means that NAS storage devices will now support Thin, Eager Zeroed Thick and Lazy Zeroed Thick format disks, allowing disk preallocation.

SSD enhancements

The VMkernel can automatically detect, tag and enable an SSD LUN. This is particularly useful with Storage Profiles.

Storage IO Control (SIOC) enhancements

SIOC is now supported on NFS datastores, where it behaves in much the same way as it does on VMFS datastores. Previously SIOC was only supported on Fibre Channel and iSCSI-connected storage.

If you want to set a limit based on MBps rather than IOPS, you can convert MBps to IOPS based on the typical I/O size for that virtual machine: IOPS = (MBps throughput / KB per I/O) * 1024. For example, to restrict a virtual machine that issues 64KB I/Os to 10MBps, set the limit to 160 IOPS.
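If you need to work this out for several virtual machines, the conversion is easy to script. The snippet below is just the formula above wrapped in a function; the name `mbps_to_iops` is my own and it has nothing to do with any vSphere API.

```python
def mbps_to_iops(mbps: float, io_size_kb: float) -> int:
    """Convert a throughput limit in MBps to an IOPS limit.

    Implements IOPS = (MBps / KB per I/O) * 1024, rounded to the nearest
    whole number. io_size_kb is the typical I/O size of the virtual machine.
    """
    if mbps < 0 or io_size_kb <= 0:
        raise ValueError("mbps must be >= 0 and io_size_kb must be > 0")
    return round(mbps * 1024 / io_size_kb)


# The example from the text: 64KB I/Os limited to 10MBps.
print(mbps_to_iops(10, 64))  # 160
```

Bear in mind the result is only as good as the I/O size you feed in, so a workload with mixed I/O sizes will not be held to an exact MBps figure.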

Storage vMotion

svMotion now supports virtual machines with snapshots. It no longer uses Changed Block Tracking; instead it uses an I/O mirroring mechanism that copies the source to the destination disk in a single pass, which shortens migration times.

Storage DRS

Storage DRS, or SDRS, is a cool new feature that, as the name suggests, allows for the distribution of resources across the storage LUNs. There are two automation levels to choose from when setting it up: No Automation (manual mode) and Fully Automated. The automated load balancing is based on storage space utilization and disk latency.

At this time it is recommended that you configure Storage DRS for all LUNs/VMs but set it to manual mode. VMware recommends this so that you can double check the recommendations made by SDRS and then either accept them or not. The recommendations are based on disk usage and latency. I expect that SDRS will soon prove itself a most valuable asset in cluster design, removing the need to work out how much space is required per LUN or where to place the disk-intensive applications in the cluster.

It is possible to disable SDRS on a schedule, for instance while backups are running. Backups increase the load on the datastores, and you don’t want SDRS to start moving virtual disks around every time latency increases due to routine backups. SDRS needs to run for 16 hours for it to take effect.
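To make the two inputs a little more concrete, here is a toy check that flags a datastore when either space utilization or latency crosses a threshold. It is purely illustrative and is not the SDRS algorithm; the `Datastore` class, the function name and the threshold values (80% and 15 ms) are assumptions chosen for the example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Datastore:
    """Hypothetical record of the figures a check like this would need."""
    name: str
    capacity_gb: float
    used_gb: float
    latency_ms: float


def rebalance_reasons(ds: Datastore,
                      space_threshold: float = 0.80,
                      latency_threshold_ms: float = 15.0) -> List[str]:
    """Return the reasons (if any) a datastore would be worth reviewing.

    Both thresholds are example values, set as parameters so they can be
    changed; they are not read from vCenter.
    """
    reasons = []
    if ds.used_gb / ds.capacity_gb > space_threshold:
        reasons.append("space")
    if ds.latency_ms > latency_threshold_ms:
        reasons.append("latency")
    return reasons


for ds in (Datastore("gold-01", 1000, 900, 5.0),
           Datastore("gold-02", 1000, 100, 20.0),
           Datastore("gold-03", 1000, 100, 5.0)):
    print(ds.name, rebalance_reasons(ds))
```

In manual mode the equivalent output from SDRS is a list of recommendations that you review and apply yourself, which is exactly why it is a sensible starting point.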

SDRS requires Enterprise Plus licensing.

An SDRS cluster is configured through Inventory > Datastores and Datastore Clusters.

Further information is available on the VMware website.

Software Fibre Channel over Ethernet (FCoE)

vSphere 4 introduced support for Fibre Channel over Ethernet (FCoE) with hardware adapters; vSphere 5 adds support for software FCoE. This makes it possible to use NICs that support partial FCoE offload. NICs with partial FCoE offload are hardware adapters that contain network and FC functionality on the same card. Such a card is also referred to as a converged network adapter (CNA).

To configure:

- Connect the VMkernel to the physical FCoE NICs installed on the host

- Activate the software FCoE adapters on the ESXi host so that the host can access the Fibre Channel storage

Only one VLAN is supported for software FCoE in vSphere, and you can have a maximum of four software FCoE adapters on one host.

Further information can be found in the What's New vSphere 5 Technical Whitepaper.

Coming Soon….Thin Provisioning

OK, not a new feature per se, but improvements have been made to hardware acceleration for thin provisioning. It helps in reclaiming space and also in monitoring usage of thin-provisioned arrays. It works on VMFS3 and VMFS5 (providing you are using ESXi 5).

With the older form of thin provisioning, problems can occur with the accumulation of dead space. VMFS5 will be able to reclaim dead space by informing the array about the datastore space freed when files are deleted or removed by svMotion. It also monitors space usage on thin-provisioned LUNs and helps administrators avoid out-of-space conditions with built-in alarms.
